by Mark Harvey

Mark Twain’s two rules for investing: 1) Don’t invest when you can’t afford to. 2) Don’t invest when you can.

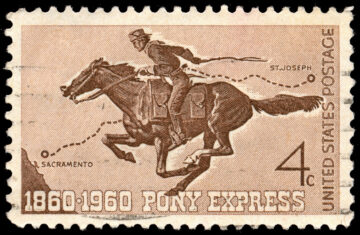

Hemorrhaging money and high burn rates on startups is not something new in American culture. We’ve been doing it for a couple hundred years. Take the pony express, for example. That celebrated mail delivery company–a huge part of western lore–only lasted about eighteen months. The idea was to deliver mail across the western side of the US, from Missouri to California, where there was still no continuous telegraph or railway connection. In some ways the pony express was a huge success, if only in showing the vast amount of country wee brave men could cover on a horse in a short amount of time. I say wee because pony express riders were required to weigh less than 125 pounds, kind of like modern jockeys.

In just a few months, three business partners, William Russell, Alexander Majors, and William Waddell, established 184 stations, purchased about 400 horses, hired 80 riders, and set the whole thing in motion. On April 3, 1860, the first express rider left St. Joseph, Missouri, with a mail pouch containing 50 letters and five telegrams. Ten days later, the letters arrived in Sacramento, some 1,900 miles away. The express riders must have been ridiculously tough men, covering up to 100 miles in a single ride using multiple horses staged along the route. Anyone who’s ever ridden just 30 miles in a day knows how tiring that is.

But the company didn’t last. For one thing, the transcontinental telegraph system was completed in October of 1861, when the two major telegraph companies, the Overland and the Pacific, joined lines in Salt Lake City. You’d think that the messieurs who started the pony express, who were otherwise very successful businessmen, would have seen this disruptive technology on the horizon. Maybe they did, and they simply wanted to launch what was arguably the coolest startup on the face of the earth, even if it lasted only a year and a half.

have instrumental value. That is, the value of a given technology lies in the various ways in which we can use it, no more and no less. For example, the value of a hammer lies in our ability to use it to drive nails into things. Cars are valuable insofar as we can use them to get from A to B with the bare minimum of physical exertion. This way of viewing technology has immense intuitive appeal, but I think it is ultimately unconvincing. More specifically, I want to argue that technological artifacts are capable of embodying value. Some argue that this value is to be accounted for in terms of the