by Michael Klenk

Someone else gets more quality time with your spouse, your kids, and your friends than you do. Like most people, you probably enjoy just about an hour, while your new rivals are taking a whopping 2 hours and 15 minutes each day. But save your jealousy. Your rivals are tremendously charming, and you have probably fallen for them as well.

I am talking about intelligent software agents, a fancy name for something everyone is familiar with: the algorithms that curate your Facebook newsfeed, that recommend the next Netflix film to watch, and that complete your search query on Google or Bing.

Your relationships aren’t any of my business. But I want to warn you. I am concerned that you, together with the other approximately 3 billion social media users, are being manipulated by intelligent software agents online.

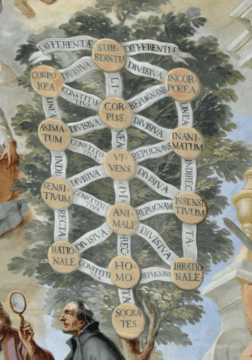

Here’s how. The intelligent software agents that you interact with online are ‘intelligent agents’ in the sense that they try to predict your behaviour, taking into account what you did in your online past (e.g. what kind of movies you usually watch), and then structure your options for online behaviour accordingly. For example, they offer you a selection of movies to watch next.

However, they do not care much about your reasons for action. How could they? They analyse and learn from your past behaviour, and mere behaviour does not reveal reasons. So they likely do not understand what your reasons are and, consequently, cannot care about them.

Instead, they are concerned with maximising engagement, a specific type of behaviour. Intelligent software agents want you to keep interacting with them: to watch another movie, to read another news item, to check another status update. The increase in the time we spend online, especially on social media, suggests that they are getting quite good at this.
