by Joseph Shieber

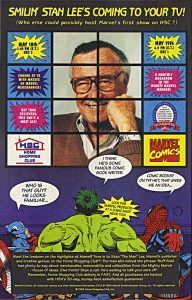

If you listened closely to the songs of praise in honor of Stan Lee, the man who is taken to be largely responsible for the present superhero-mad moment in popular culture, you might have heard an undertone of discord.

As Vox’s Alex Abad-Santos noted, Lee’s fame in the popular imagination as the originator of many of the most beloved characters of the Marvel Universe was matched within the narrower comic book community by his reputation for failing to give adequate credit to the artists and writers with whom he worked.

Abad-Santos points to a 2016 piece in Vulture by Abraham Riesman, in which Riesman painstakingly documents the ways that Lee maneuvered to divert credit from his comic hero co-creators – most notably perhaps Jack Kirby and Steve Ditko – to foster the legend that Lee was single-handedly responsible for Marvel Comics’ biggest successes.

As Riesman writes,

Lee and Marvel demonstrably — and near-unforgivably — diminished the vital contributions of the collaborators who worked with him during Marvel’s creative apogee. That is part of what made Lee a hero in the first place, but he’s lived long enough to see that self-mythologizing turn against him. Over the last few decades, the man who saved comics has become — to some comics lovers, at least — a villain.

Riesman describes how Stan Lee’s legend rests in large part on an explosion of creative production “in the mid-’60s, when Lee and [Steve] Ditko were working together on Spider-Man and Doctor Strange stories and Lee and [Jack] Kirby were working together on nearly everything else, including The Avengers, The X-Men, and The Fantastic Four.”

The complicating factor in that legend is that Lee, Ditko, and Kirby have vastly different recollections of what that “working together” amounted to.

As Lee would have it, he completed the lion’s share of the work. That included fleshing out the characters, conceiving of the plots and then, after the artists had drawn up the comic book frames according to Lee’s plans, writing the dialogue that appeared in the characters’ speech bubbles.

Here’s how Riesman describes the controversy:

[Kirby and Ditko], in Lee’s retelling, were fantastic and visionary, but secondary to his own vision. According to Kirby and Ditko, that’s hogwash. Ditko has retreated into a hermetic existence in midtown Manhattan, where he types up self-promoting mail-order pamphlets claiming Lee had only the most threadbare initial ideas for Spider-Man, and that Ditko is the one who fleshed the iconic character out into what he is today, then came up with most of the plot beats in any given story. Kirby, from the time he left Marvel in 1970 until his death in 1994, swore up and down that Lee was a fraud on an even larger scale: Kirby said he himself was the one who had all the ideas for the Hulk, Iron Man, Thor, and the rest, and that Lee was outright lying about having anything to do with them. What’s more, he said Lee was little more than a copy boy, filling in dialogue bubbles after Kirby had done the lion’s share of the conceptual and writing work for any given issue.

Nor was the controversy merely a matter of who deserved credit for the creation of so many beloved comic book heroes; millions – if not tens or hundreds of millions – of dollars were on the line as well. As Asher Elbein writes in his 2016 article “Marvel, Jack Kirby, and the Comic-Book Artist’s Plight”, the fight between Kirby’s heirs and Marvel over Kirby’s role in the creation of those seminal comic book characters and their stories had broader implications for many others working in the comic industry. (Marvel settled with Kirby’s heirs in 2014, rather than allowing the suit to proceed.)

I was thinking about the darker side of Lee’s legend, and his failure to adequately acknowledge the contributions of his creative teams, as I was reading a recent excerpt from Albert-László Barabási’s new book The Formula: The Universal Laws of Success. In that excerpt, which appears in Nautilus, Barabási describes an algorithm for predicting credit allocation in scientific research, an algorithm created by Hua-Wei Shen, one of the computer scientists working in Barabási’s lab.

Barabási had posed to Shen the problem of coming up with an algorithm that, when presented with all of the scientific papers on which a given Nobel Prize was based, could correctly predict the Nobel Prize winners from all of the co-authors – sometimes numbering over a hundred scientific co-contributors.

Compounding the problem was the fact that different scientific disciplines have different credit practices. In physics, for example, authors are often listed alphabetically. In biology, on the other hand, the leader of the research team responsible for the article is often listed last.

Shen’s algorithm was remarkably successful, and became the subject of Shen and Barabási’s 2014 paper in the Proceedings of the National Academy of Sciences, “Collective credit allocation in science”. (For more on one of the few cases in which the algorithm failed – the case of Douglas Prasher and the 2008 Nobel Prize in Chemistry – read Barabási.)

For anyone familiar with the story of Stan Lee at Marvel, and how, in the popular imagination, it is Lee alone who is responsible for the myriad characters populating the Marvel Universe, what Shen’s algorithm found should come as no surprise.

Here’s Barabási’s description:

When we allocate credit to the members of a team, who gets the credit has nothing to do with who actually did the work. We don’t dole out reward on the basis of who came up with the idea in the first place, who slaved for weeks, who showed up to meetings to graze the coffee and doughnuts, who jumped in at the last minute with a crucial suggestion, who had the eureka moment, or who yammered on and on but didn’t really contribute anything. The algorithm accurately selected Nobel Prize winners and handed them the win not by figuring out who did what. Rather, it did so by detecting how peers in the discipline paid attention to the work of some of the coauthors and ignored the work of the others. The predictive accuracy of the algorithm led us to our next insight about teams: Credit for teamwork isn’t based on performance. Credit is based on perception. Which makes perfect sense if we remember that success is a collective phenomenon, centered on how other people perceive our performance. Our audiences and colleagues allocate credit based on their perceptions of our related work and the body of work produced by our collaborators.

In particular, here’s one way in which the algorithm Shen and Barabási published predicts that the scientific community will misallocate credit. The algorithm rewards a sustained track record of work in a particular area. So a polymathic genius who flits from research group to research group, sowing the seeds for many remarkable results but not bothering to do the grunt work on any of them, wouldn’t get the accolades for those results. (Here’s looking at you, Johnny von Neumann!)
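
To get a feel for how credit can follow attention rather than effort, here is a toy sketch in Python. It is not Shen and Barabási's published code, only an illustration in the spirit of the description above: a coauthor earns credit whenever later papers cite the joint paper alongside that coauthor's other work. The function name `credit_shares`, the paper labels, the author names, and the citation data are all invented for the example.

```python
from collections import Counter


def credit_shares(target, authors_of, citing_lists):
    """Toy credit split among the coauthors of `target` (hypothetical helper).

    authors_of:   dict mapping a paper id to its list of authors
    citing_lists: one set of cited paper ids per later paper in the field
    """
    team = authors_of[target]

    # Count how often each paper is cited alongside the target paper.
    # The target itself is counted once per citing paper.
    co_cited = Counter()
    for refs in citing_lists:
        if target in refs:
            co_cited.update(refs)

    # Each co-cited paper hands out its count equally among its own authors;
    # only members of the target's team collect their part.
    credit = {author: 0.0 for author in team}
    for paper, strength in co_cited.items():
        paper_authors = authors_of.get(paper, [])
        for author in team:
            if author in paper_authors:
                credit[author] += strength / len(paper_authors)

    total = sum(credit.values())
    if total == 0:
        # Nobody cited the target: fall back to an equal split.
        return {author: 1.0 / len(team) for author in team}
    return {author: value / total for author, value in credit.items()}


# Invented data: Ada keeps publishing in the area of the joint paper P0,
# while Ben's other paper B1 is never cited together with P0.
authors_of = {
    "P0": ["Ada", "Ben"],
    "A1": ["Ada"],
    "A2": ["Ada", "Cy"],
    "B1": ["Ben"],
}
citing_lists = [{"P0", "A1"}, {"P0", "A1", "A2"}, {"P0"}, {"B1"}]

shares = credit_shares("P0", authors_of, citing_lists)
print(shares)
```

In this made-up example Ada ends up with the larger share of the credit for P0, although nothing in the data says who did more of the work on it. That is the polymath's problem in miniature: Ben's contribution to P0 may have been decisive, but his other work lies elsewhere, so the field's attention does not flow back to him.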

More generally, though, what this means is that the sorts of skills that will result in recognition by the Nobel Prize committee – focus on a particular area of investigation, networking skills, ability to organize and direct the research of a lab on a question of interest – are not the only sorts of skills that might be important in the discovery of scientific knowledge. For that reason, then, though the Nobel Prize winners are unquestionably deserving of their accolades, the way the Prizes are awarded elides the contributions of other researchers.

Furthermore, in cases in which prejudice contributes to “how others perceive our performance”, these facts about the allocation of credit will work particularly to the detriment of members of the groups at the receiving end of that prejudice.

For example, in the Nautilus piece, Barabási discusses the case of women in economics:

The data shows that women economists who exclusively work alone are just as likely to receive tenure as men. … Yet a gap suddenly appears once a woman coauthors a paper, and the chances only widen with each collaborative project she participates in. Instead of increasing her odds, every coauthored paper she contributes to lowers them. The effect is so dramatic, in fact, that women who exclusively collaborate face a yawning tenure chasm. The research shows that when women coauthor, they’re accorded far less than half the usual benefits of authorship. And when women coauthor exclusively with men, they see virtually no gains. In other words, female economists pay an enormous penalty for collaborating. … From a tenure perspective, if you’re a female economist publishing with men, you might as well not publish at all.

So much for the connection between Stan Lee and the Nobel Prize. Where does epistemology come in?

In my piece for 3QD last month, I argued that social mechanisms better explain how we can achieve knowledge by relying on the sources that provide us with information.

This focus on mechanisms or processes allows us to deemphasize the reliance on individual informants and to shift the focus onto the ways that multiple people – even, in the case of Big Science, sometimes literally hundreds of people – can collaborate to discover and transmit knowledge.

The requirements for the Nobel Prizes, which limit the number of awardees in a given category to three, are symptomatic of a cognitive bias that personifies the processes involved in knowledge discovery and transmission. (Here’s looking at you, Fundamental Attribution Error!)

The Nobel Committee does have one other recourse for rewarding larger groups: that of rewarding whole organizations, such as Médecins Sans Frontières or the Office of the United Nations High Commissioner for Refugees (UNHCR). But this is actually also emblematic of the same cognitive bias. We like corporate entities because we can think of them in personal terms, as is evidenced by Supreme Court decisions like Citizens United or Hobby Lobby.

Of course, collaborative work is not a new phenomenon. However, the study of epistemology failed to keep pace with the rise of specialization and the spread of knowledge networks in the 19th century. Instead, much epistemology even now, in the 21st century, is still focused on the solitary victim of Descartes’s Evil Demon. Only a change of focus that addresses the ways in which our social – and technological – systems contribute to knowledge acquisition can result in an epistemology that is adequate for our current moment. As Stan Lee would say, “Excelsior”.