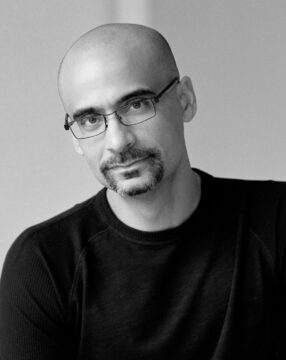

by Scott Samuelson

When I turned fifty, I went through the usual crisis of facing that my life was—so to speak—more than half drunk. After moping a while, one of the more productive things I started to do was to write letters to people living and dead, people known to me and unknown, sometimes people who simply caught my eye on the street, sometimes even animals or plants. Except in rare cases, I haven’t sent the letters or shown them to anyone.

Writing personal letters without any designs has been psychologically helpful. After venting any rage or confusion, I’ve found that what I have to say generally boils down to two things: I love you and I’m sorry. In the face of my mortality, it’s been good to be reminded of the strength of those two sentences and the connections built on their foundation. There’s something more than proper or quaint in addressing someone at the start of a letter as “Dear.”

Because our current president occupies more of my mental space than I’d prefer, he’s one of the people I’ve written a letter to. Dear Donald Trump. Then I ticked off a laundry list of grievances, going all the way back to the 80s.

When it finally dawned on me that I was addressing him as a figure rather than as a person, I began to wonder if I could talk to him human to human. Here’s a snippet of what I went on to write:

I’m having real troubles believing that you think of similar things as I do when you think about what makes life worth living. Am I wrong? For instance, that cry inside John Coltrane’s tone and Dolly Parton’s voice—do any songs at all break your heart with their beauty? Or what about friendship? When I recently visited a dear old friend of mine who lives in Brussels, my whole being flooded with joy when I saw his face at the airport. Do you have any such friends? What about a first love, a sweet pure love before puberty, before you even longed to kiss her (much less grab her by the pussy)—do you still dream of her? When you see, say, the first little daffodil after what has felt like three straight months of February, do you feel glad to be alive?

I continued like that in search of how we might connect over a fundamental good, away from the political shitshow that he’s in part responsible for.

Sughra Raza. First Snow. Dec 14, 2025.

One Monday in 1883 Southeast Asia woke to “the firing of heavy guns” heard from Batavia to Alice Springs to Singapore, and maybe as far as Mauritius, near Africa.

Like many other video gamers (nearly eight million, in fact), I have spent no small portion of recent weeks in the robot-infested, post-diluvian wastes of late-22nd-Century Italy, looting remnants of a collapsed civilization while hoping that a fellow gamer won’t sneak up and murder me for the scraps in my pockets. This has been much more fun than the preceding description might lead you to believe, if you are not a fan of such grim fantasy playgrounds. It has also, interestingly, afforded rather heart-warming displays of the better side of human nature, despite the occasional predatory ambush or perfidious betrayal. It helps somewhat that nobody really dies in this game; they just get “downed” and then “knocked out” if not revived in time, leaving behind whatever gear they were carrying (except for what they were able to hide in their “safe pocket”, the technical and anatomical details of which are left to the player’s imagination).
