by Bill Benzon

Distant Reading and Embracing the Other

As far as I can tell, digital criticism is the only game that's producing anything really new in literary criticism. We've got data mining studies that examine thousands of texts at once. Charts and diagrams are necessary to present results and so have become central objects of thought. And some investigators have all but begun to ask: What IS computation, anyhow? When a dyed-in-the-wool humanist asks that question, not out of romantic Luddite opposition, but in genuine interest and open-ended curiosity, THAT's going to lead somewhere.

While humanistic computing goes back to the early 1950s when Roberto Busa convinced IBM to fund his work on Thomas Aquinas – the Index Thomisticus came to the web in 2005 – literary computing has been a backroom operation until quite recently. Franco Moretti, a professor of comparative literature at Stanford and proprietor of its Literary Lab, is the most prominent proponent of moving humanistic computing to the front office. A recent New York Times article, Distant Reading, informs us:

…the Lit Lab tackles literary problems by scientific means: hypothesis-testing, computational modeling, quantitative analysis. Similar efforts are currently proliferating under the broad rubric of “digital humanities,” but Moretti's approach is among the more radical. He advocates what he terms “distant reading”: understanding literature not by studying particular texts, but by aggregating and analyzing massive amounts of data.

Traditional literary study is confined to a small body of esteemed works, the so-called canon. Distant reading is the only way to cover all of literature.

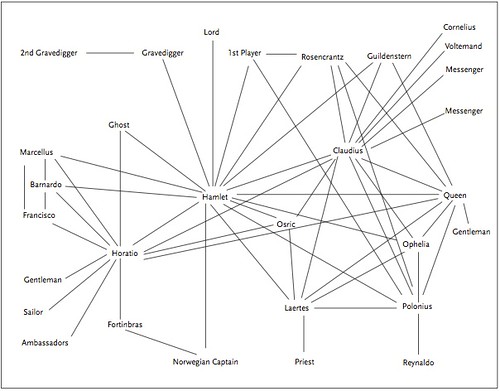

But Moretti has been also investigating drama, play by play, by creating diagrams depicting relations among the characters, such as this diagram of Hamlet:

The diagram gives a very abstracted view of the play and so is “distant” in one sense. But it also requires Moretti to attend quite closely to the play, as he sketches the diagrams himself and so must be “close” to the play.
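To make the idea concrete, here is a minimal sketch of how such a character network could be represented and queried. The edge list is a small hand-picked illustration, not Moretti's data, and the function names are hypothetical. The only assumption is that two characters are linked when they speak to each other.

```python
from collections import defaultdict

# Illustrative subset of exchanges in Hamlet (an assumption, not Moretti's data).
EXCHANGES = [
    ("Hamlet", "Horatio"),
    ("Hamlet", "Claudius"),
    ("Hamlet", "Gertrude"),
    ("Hamlet", "Ophelia"),
    ("Hamlet", "Polonius"),
    ("Hamlet", "Laertes"),
    ("Claudius", "Gertrude"),
    ("Claudius", "Polonius"),
    ("Claudius", "Laertes"),
    ("Polonius", "Ophelia"),
    ("Polonius", "Laertes"),
    ("Laertes", "Ophelia"),
]


def build_network(pairs):
    """Return an undirected adjacency map: character -> set of interlocutors."""
    network = defaultdict(set)
    for a, b in pairs:
        network[a].add(b)
        network[b].add(a)
    return dict(network)


def degree_ranking(network):
    """List (character, number of links), most connected first."""
    return sorted(
        ((name, len(links)) for name, links in network.items()),
        key=lambda item: (-item[1], item[0]),
    )


network = build_network(EXCHANGES)
ranking = degree_ranking(network)
```

Even this toy version shows both senses of distance at work: someone has to read the play closely enough to decide who speaks to whom, and only then can the play be viewed as a set of nodes and links.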

His most recent pamphlet, “Operationalizing”: or, the Function of Measurement in Modern Literary Theory (December 2013, PDF), discusses that work and concludes by observing: “Computation has theoretical consequences—possibly, more than any other field of literary study. The time has come, to make them explicit” (p. 9).

If such an examination is to take place, the profession must, as Willard McCarty asserted in his 2013 Busa Award Lecture (see below), embrace the Otherness of computing:

I want to grab on to the fear this Otherness provokes and reach through it to the otherness of the techno-scientific tradition from which computing comes. I want to recognize and identify this fear of Otherness, that is the uncanny, as for example, Sigmund Freud, Stanley Cavell, and Masahiro Mori have identified it, to argue that this Otherness is to be sought out and cultivated, not concealed, avoided, or overcome. That its sharp opposition to our somnolence of mind is true friendship.

In a way it is odd that we, or at least the humanists among us, should regard the computer as Other, for it is entirely a creature of our imagination and craft. We made it. And in our own image.

Can the profession even imagine, much less embark on, such a journey?

Reading and Interpretation as Explanation

To appreciate what's at stake we need to consider how academic literary critics think of their craft. This passage by Geoffrey Hartman, one of Yale's so-called “Gang of Four” deconstructive critics, is typical (The Fate of Reading, 1975, p. 271):

I wonder, finally, whether the very concept of reading is not in jeopardy. Pedagogically, of course, we still respond to those who call for improved reading skills; but to describe most semiological or structural analyses of poetry as a “reading” extends the term almost beyond recognition.

Note first of all that “reading” doesn't mean quite what it does in ordinary use. In standard academic usage a written exegesis is called “a reading.”

In that passage Hartman is drawing a line in the sand. What he later calls the “modern ‘rithmatics'—semiotics, linguistics, and technical structuralism” do not qualify as reading, even in the extended professional sense. Nor, it goes without saying, would Moretti's “distant reading” qualify as reading. What each of these methods does is to objectify the text and thereby “block” the critic's “identification” with and entry into the text's world.

I cannot place too much stress on how foundational this sense of reading is to academic literary criticism. For the critic, to “read” a text is to explain it. The hidden meaning thus found is assumed to be the animating force behind the text, the cause of the text. Objectification gets in the way of the critic's identification with the text and so displaces this “reading” process.

By the time Hartman wrote that essay, the mid-1970s, the interpretative enterprise had become deeply problematic. We can trace the problem to the so-called New Critics, who rose to prominence after World War II with their practice of “close” reading. These critics insisted on the autonomy of the text. Literary texts contain their meaning within themselves and so must be analyzed without reference to authors or socio-historical context. The text stands alone.

The problem is that “reading” presupposes some agent behind the text. How then can interpretation proceed when authors and context have been ruled out of court? As a practical matter, it turns out that as long as critics share common assumptions, they can afford the pretense that texts stand alone. That's what the New Critics and much of the profession did.

But by the 1960s critics began noticing that, hey, we don't always agree in our readings and there's no obvious way to reconcile the differences. Critics began to suspect that each in her own way was reading herself into this supposedly autonomous text. That's when the French landed in Baltimore – at the (in)famous structuralism conference at Johns Hopkins in 1966 – and literary criticism exploded with ideas and controversy.

A Mid-1970s Brush with the Other

Hartman published that essay in 1975, roughly the mid-point of this process. There are other texts from this period that illustrate how literary criticism brushed up against objectification but then deflected it.

Eugenio Donato's 1975 review of Lévi-Strauss's Mythologiques is particularly important. On the one hand, as Alan Liu has pointed out in a recent essay, “The Meaning of the Digital Humanities” (PMLA 128, 2013, 409-423; see video below), Lévi-Strauss's structuralist anthropology was “a midpoint on the long modern path toward understanding the world as system” (p. 418) – which, we'll see later on, has brought the critical enterprise to the point of collapse. On the other, Donato was one of the organizers of the 1966 structuralism conference.

Donato's review appeared in Diacritics (vol. 5, no. 3, p. 2) and was entitled “Lévi-Strauss and the Protocols of Distance.” Notice that trope of distance, which has served to indicate the relationship of critic to text from the New Critics to Moretti. Donato tells us that he isn't interested in Lévi-Strauss's accounts of specific myths, that is, his “technical structuralism” in Hartman's phrase. What interests Donato is that “despite Lévi-Strauss' repeated protestations to the contrary, the anthropologist is not completely absent from his enterprise.”

What interests Donato is the way Lévi-Strauss's text itself is like a literary text and so can be analyzed as one. That is typical of deconstructive criticism and of Lévi-Strauss's reception among literary critics. Beyond reference to binary oppositions, few were interested in his analysis of myths or other ethnographic materials. None were interested in abstracting and objectifying literary texts in the way Lévi-Strauss had objectified myths through his use of diagrams, tables, and quasi-mathematical formulas.

A year later Umberto Eco published A Theory of Semiotics (1976) in which he used a computational model devised by Ross Quillian in 1968 and posited it as a basic semiotic model. But Eco didn't use any of the later and more differentiated models developed in the cognitive sciences, nor did any other literary critics. Quillian's model took the form of a network in which concepts were linked to other concepts. And that's what Eco liked, the notion of concepts being linked to other concepts in “a process of unlimited semiosis” (p. 122). What had to be done to make such a model actually work, that didn't interest him.
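The appeal of a network of linked concepts can be shown with a toy example. The sketch below is not Quillian's program or Eco's formalism; the concepts, the links, and the `follow` and `reachable` helpers are all invented for illustration. It shows only that in such a structure each concept is defined by pointing to other concepts, so a chain of definitions can circle back without ever reaching a final term.

```python
# Toy concept network: each concept points to the concepts that define it.
# All entries are hypothetical; this is not Quillian's 1968 model.
CONCEPTS = {
    "plant": ["living", "structure"],
    "living": ["organism"],
    "organism": ["living", "structure"],
    "structure": ["parts"],
    "parts": ["structure"],
}


def follow(start, steps):
    """Follow the first link from each concept for a fixed number of steps."""
    path = [start]
    current = start
    for _ in range(steps):
        current = CONCEPTS[current][0]
        path.append(current)
    return path


def reachable(start):
    """Collect every concept reachable from start (depth-first traversal)."""
    seen = set()
    stack = [start]
    while stack:
        concept = stack.pop()
        if concept in seen:
            continue
        seen.add(concept)
        stack.extend(CONCEPTS.get(concept, []))
    return seen
```

Calling `follow("plant", 4)` ends up cycling between `living` and `organism`: the chain never bottoms out. Getting from a structure like this to a model that does real work takes a good deal of further machinery, which is exactly the part Eco set aside.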

During this same period Jonathan Culler published Structuralist Poetics (1975), where he observed that linguistic analysis is not hermeneutic (p. 31). On this he agrees with Hartman. He also employed some ideas from Chomskyan linguistics – itself derived from abstract computational considerations – such as the contrast between deep structure and surface structure and the notion of linguistic competence, which became literary competence. But Culler didn't continue down this path; few did.

The Center is Gone

By the turn of the millennium, however, it became increasingly clear that things were rotten in Criticland. In one of his last essays, Globalizing Literary Study (PMLA, Vol. 116, No. 1, 2001, pp. 64-68), Edward Said notes: “An increasing number of us, I think, feel that there is something basically unworkable or at least drastically changed about the traditional frameworks in which we study literature” (p. 64). He goes on (pp. 64-65):

I myself have no doubt, for instance, that an autonomous aesthetic realm exists, yet how it exists in relation to history, politics, social structures, and the like, is really difficult to specify. Questions and doubts about all these other relations have eroded the formerly perdurable national and aesthetic frameworks, limits, and boundaries almost completely. The notion neither of author, nor of work, nor of nation is as dependable as it once was, and for that matter the role of imagination, which used to be a central one, along with that of identity has undergone a Copernican transformation in the common understanding of it.

Thought about that autonomous aesthetic realm presupposes stable, and ultimately traditional, conceptions of the self, the nation, of identity, and the imagination. Given those as a vantage point, the critic can interpret texts and thereby explore the autonomous aesthetic. Without those now shattered concepts that autonomous aesthetic realm is but a phantasm of critical desire.

Post-structuralist criticism had dissolved things into vast networks of objects and processes interacting across many different spatial and temporal scales, from the syllables of a haiku dropping into a neural net through the processes of rendering ancient texts into movies made in Hollywood, Bollywood, or “Chinawood” (Hengdian, in Zhejiang Province) and shown around the world.

Authors were supplanted by various systems. Lacanians revised the Freudian unconscious. Marxists found capitalism and imperialism everywhere. And signs abounded, bewildering networks of signs pointing to signs pointing to signs in enormous tangles of self-referential meaning. Identity criticism stepped into the breach opened by deconstruction. Feminists, African-Americans, gays and lesbians, Native Americans, Latinos, subaltern post-colonialists and others spoke from their histories, reading their texts against and into the canon.

If it has become so difficult to gain conceptual purchase on the autonomous aesthetic realm, then perhaps we need new conceptual tools. Computation provides tools that allow us to examine large bodies of texts in new ways, ways we are only beginning to utilize. Computation gives us ways to think about systems on many scales, about how they operate in a coherent way, or fail to do so. It gives us tools to examine such systems, but also to simulate them.

Current Prospects: Into the Autonomous Aesthetic

And so we arrive back at Moretti's call for an exploration of the implications of computing for literary criticism. That the profession has no other viable way forward does not imply that it will continue the investigation. It could after all choose to languish in the past.

What reason do we have to believe that it will choose to move forward?

The world has changed since the 1970s. On the one hand, the critical approaches that were new and exciting back then have collapsed, as Said has indicated. On the other hand, back then the computer was still a distant and foreign object to most people. That's now changed. Powerful computers are ubiquitous. Everyone has at least one, if not several – smartphones, remember, are powerful computers.

Even more importantly, we now have a cohort of literary investigators who grew up with computers and for whom objectified access to texts is familiar and comfortable. Geoffrey Hartman was worried about getting, and staying, “close” to the text. But Moretti talks of “distant” reading and so do others. Can it be long before critics figure out that distance is really objectification and that it's not evil?

Furthermore we now have a generation of younger scholars who draw on cognitive science and evolutionary psychology, disciplines that didn't exist when the French landed in Baltimore in 1966. Computation played a direct role in cognitive science by serving as a model for the implementation of mind in matter. And, via evolutionary game theory, computation is the intellectual driver behind evolutionary psychology as well.

In addition, over the last couple of years various digital humanists have sensed that the field lacks a substantial body of theory. And there is a dawning awareness that this theory doesn't have to be some variation on post-structuralist formations trailing back into the 1960s. Perhaps we need some new kinds of theory.

In a recent article, Who You Calling Untheoretical? (Journal of Digital Humanities, Vol. 1, No. 1, Winter 2011), Jean Bauer notes that the database is the theory:

When we create these systems we bring our theoretical understandings to bear on our digital projects including (but not limited to) decisions about: controlled vocabulary (or the lack thereof), search algorithms, interface design, color palettes, and data structure.

Finally, in order to use the computer as a model for thought one doesn't have to adopt the view, mistaken in my opinion, that brains are computers. Whatever its limitations, the computer, as idea, as abstract model, is the most explicit model we have for the mind. Or, to be more precise, it is the most explicit model we have for how a mind might be embodied in matter, such as a brain.

That's a way forward, a rich theoretical opportunity. But it's not a matter of latching on to a handful of ideas. It's a matter of long-term investigation into territory that is as yet unexplored.

For the nature of computing itself is still fraught with mystery. It is up to us to redefine it for the theoretical investigation and study of the humanities.

* * * * *

DH2013 Busa Award Lecture by Willard McCarty:

Meaning of the Digital Humanities – Alan Liu:

* * * * *

Bill Benzon's blog, New Savanna. Posts about digital humanities.